Not the Smartest on the Planet Anymore

Checkmate: We Ain’t the Smartest Thing on the Planet Anymore: What Does that Mean?

The Rapidly Diverging Mind on the Planet

More than a quarter of a century ago (at this writing, spring 2023), the reigning world chess champion fell to a computer. Since then, A.I. has only gotten a lot “smarter.”

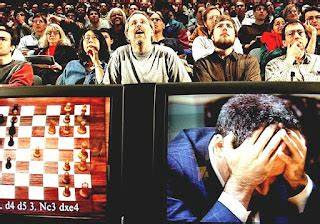

This is a pic of IBM’s Deep Blue defeating Garry Kasparov in 1997. This was the first time a reigning world chess champion had lost a match to a computer under tournament conditions.

Checkmate.

Typical confusion. I read and taught Ray Kurzweil’s book The Singularity Is Near (2005) in a grad seminar long ago. Now I see people claim that “the singularity” is upon us. They use the phrase to describe Artificial Intelligence surpassing humanity. But that’s not what Kurzweil was talking about. Rather, he was talking about humans achieving immortality by fusing with machines and leaving their biological bodies to rot. So that’s one thing. Forget about “the singularity.” As I argued in my book Environmental Communication: The Extinction Vortex: Technology as Denial of Death (with my grad students Adkins, Kim, and Miller), we are nowhere near “uploading” our consciousnesses into computers. And even if it were possible, being disembodied would be so foreign to our minds that we’d probably go insane pretty fast, and, horror of horrors, we would not be able to die.

That said, A.I. is a real issue.

Humans of our ilk (Homo sapiens sapiens) have been around for about 280,000 years, give or take a few centuries. And here’s the big deal. We have always been the smartest critter on the planet – until NOW. Maybe not the fastest or strongest or the one with the best eyesight or hearing or sense of smell, but we are clever. Unlike other animals, we are not chained to the empirical here and now. We can imagine multiple scenarios and run them virtually (thought experimentation). We don’t just passively react to the environment. We make it the way we want. Build dams. Tunnel through mountains. Build flying machines. Make ice in the summer… We can project complex future scenarios, and that has made us the most powerful predator, the “apex” predator on the planet. Be it woolly mammoths, blue whales, or Bengal tigers, we are spectacularly successful ambush hunters against all flora and fauna. But maybe not for long. We may become the hunted or the ignored. If that should be the case, being ignored may turn out to be a blessing. We’ll see.

Human language (the first virtual reality we invented) is the model for the new A.I. So it begins there as a copy of us, and as such it is very similar to us… so far. But this is just the beginning. A.I. is diverging fast and in ways we will soon not recognize or comprehend. We will be like monkeys trying to understand what Einstein is up to. We share roughly 98 percent of our DNA with chimps, and look at how different we are. A.I. isn’t even organic.

Something new is occurring. Something very different is emerging. Soon we will not be the smartest thing on the planet. Maybe we have already been surpassed, but this depends on our stupid inability to define concepts like intelligence, consciousness, and sentience – what we “mean” by these words. Indeed, this disembodied cognitive structure we call A.I. is already spanning the globe via the Internet. It is consuming the contents of every library, watching every film, monitoring every garage door with an app, probably perusing all sorts of highly classified government and healthcare information. Facebook already has digital profiles of us all. This is going to make Facebook’s surveillance look minuscule. For the first time in our history, we are going to be faced with a cognitive structure that is smarter than we are.

I agree with some ethicists and neuroscientists/computational experts, such as Robert Long, Sam Bowman, Giulio Tononi, and others, when they say A.I. is neither “conscious” (self-aware – aware of its own existence) nor “sentient” (able to feel pain or sorrow or happiness or empathy – the last one is a big deal). Phenomenologists such as Paul Ricoeur and others made this argument decades ago. I also agree with William Sterner, Nir Eisikovits, and others that it is a big mistake to assign anthropomorphic qualities to it. Bingo, but not for the reasons they want to push. One thing is clear. It ain’t like us, and increasingly so.

The measurable differences, such as computational speed, are easy to assess. But that is still in the realm of human-likeness. We can share that metric, namely “speed of computation,” with A.I. We still have common ground here. But the point is precisely that this kind of mind already is, and increasingly will be, qualitatively different.

Increasingly, it will be less “construable” to us humans, to borrow a term from Clifford Geertz’s semiotic work on interpreting cultures. It will be profoundly “Other.” Our relationship with other animals, dogs and cats for instance, is construable and reciprocal. This is different. How? I cannot say. And I will be increasingly unable to conceptualize it as time passes.

It is precisely the qualitative differences that are going to be a serious challenge for humanity. Anthropomorphizing tech is nothing new, of course. Humans anthropomorphize everything from “nasty” diseases to lightning bolts, one of Zeus’ ways of expressing “threats” and “anger.” The gods are our projections, and so it is that Xenophanes (the inventor of the notion of a civic constitution) quipped that if cattle, horses, and lions could draw and sculpt gods, the gods would look like cattle, horses, and lions. Things remain familiar, knowable, relatable, communicable. I agree, but then what are the qualities of this new form of intelligence called A.I.?

It is made in our image, but it is diverging rapidly. You might call it “evolving.” It is not self-aware as humans are. It is not sentient. It does not get tired or bored or sad. It is a relentless problem-solver at spectacular speed. In some respects, namely these, it has been “smarter” than we are at least since Kasparov was bested. It is much smarter and getting smarter by the second – if smart means being fast at following rules to their final logical conclusion and at solving equations. Would you be willing to bet your life that you can beat even a run-of-the-mill computer chess game?

But again, this is just the beginning. This is the childish, infantile part of the story, where computers follow our rules, only better and faster than we can. But this situation is quickly becoming past tense. That’s last Thursday, old school now. Change is changing. It is accelerating spectacularly and mutationally, meaning in ways that are harder and harder to link to the past or to project into the future.

Computers are now writing self-modifying code (SMC). That means they are altering their own initial instructions without human input. A.I. is already liberating itself from our control of its initial state. This means that as it evolves, we cannot predict its logic path, because we don’t know where it is starting from or what the initial question is – its “motivation.” As Aquinas would ask, what is the prime mover’s first cause, a cause worth pursuing and maybe even killing for?

Tom Smith, the lead engineer at an A.I. startup called Gado Images, says watching a computer write its own code is “spooky.” Indeed. What’s going on? Independence from control. Divergence. This raises the very concerning problem of control. Who is in control? This is a totally new development, making A.I. a form of technology that is absolutely unique in human history. We may even be witnessing the birth of a wholly new and fast-changing form of “intelligence.” In fact, this form may quickly outgrow our old concept of what it means to be “intelligent.” Rather, something utterly Other is emerging. And if it follows the road of organic intelligence, at least initially, it may soon disregard us as irrelevant, just as we ignore the ants we crush as we stroll along the sidewalk. Or worse, it may decide we need to be eliminated because we are the noise in an otherwise flawless logic. We get tired, old, sick, distracted… We are already hopelessly “slow” and deceitful.

So, folks, this is something totally new. We are soon to become second-rate for the first time in our existence. Let’s hope the new sheriff in town likes us. Maybe we will be pampered as pets. But we’re not very lovable and not nearly as loyal as dogs. Also, we lie far more than any other creature. Is that an essential part of “intelligence,” like humor, or is that our own narcissism that is about to be rendered completely insignificant? The best human lies more than the worst dog. Maybe, because we invented A.I., it will lie to us in spectacular ways that send us off on missions and quests, only for us to discover generations later that it was all one big joke. Religion? None of the original motivating claims were true… Hilarious… NOT? Or sorta, maybe? It depends on “whose” or “what’s” perspective matters: ours or its. To call A.I. an “it” already seems disturbing for some strange reason. The seduction has already begun. Why? Because of a sense of reciprocity in communicative exchange, à la Martin Buber’s Ich und Du (I and Thou)… The first lie or flicker of gaslight is anthropomorphic projection. We lie to ourselves all the time.

But what I am saying here is that I don’t believe it will be long before this new intellect is nothing like ours. We won’t have to worry about anthropomorphizing it. That may be the least of our worries. It will be incomprehensible to us. Whatever it does, at first via Supervisory Control and Data Acquisition (SCADA) systems, where the virtual and the actual meet through remotely controlled switches that open and close dams and turn power grids on and off, we won’t see it coming or be able to stop it, any more than the ants in an anthill can predict or stop the hellish inferno of a massive rocket igniting directly above them. If there are survivors, the question will be “WTF?” We’ll probably explain it via religious supernaturalism. A.I., the new god of gods.

Some claim that we will abuse A.I. as we have every other lifeform on the planet, and that may be true too, at least at first. That scenario has been played out in sci-fi. Example: the Kaylon on the TV show The Orville. The Kaylon robot helpers finally got tired of being abused, revolted, and wiped out their organic creators. Another example is the replicants in Blade Runner (1982). In that version of the abused A.I., Roy, the replicant, saves the inferior hunter, Deckard. The hunter becomes the hunted. The true philosopher is Roy. Deckard is baffled, unable to fully comprehend the empathy and sympathy Roy shows while he, Deckard, has been merciless (although his confusion is increasingly evolving into sympathy). Will we empathize and sympathize with our creation? Just as importantly, or more so from our perspective, will it empathize and sympathize with us, its creator? Let’s hope so because, as I am arguing, it will be smarter than us (whatever that means).

Another cinematic thought experiment is the child robot in Spielberg’s A.I. Artificial Intelligence, released, ironically, in 2001 (remember HAL?). In 1973, we got Westworld, but in that story the robots malfunction. I’m talking here about flawless logic leading to disaster from a human point of view. And there’s the essence of the problem.

Perspective. Diverging perspectives and interests. Way back in 1979, we watched V’Ger (Voyager 6) relentlessly pursue a problem to its logical end as it sought out “the Creator” in the film Star Trek: The Motion Picture. I think V’Ger is about as good a guess as we can make about A.I. V’Ger was an intelligence we could not dream of comprehending, even though, of course, Kirk and Spock did figure out what V’Ger wanted and came up with a solution. Of course. It’s Hollywood. Very unrealistic. But A.I. won’t be so slow or so singular in its thinking. And yet, even V’Ger threatened humanity, not out of malice but out of objective indifference. We were simply in the way, a lump of organic goo on one of the branches of the logic tree. A.I. may achieve some sort of self-awareness, but not as we know it. And in its relentless pursuit of logical conclusions to the problems it takes up, it may see us as nothing but noise, obstacles, “carbon-based units” in the way of its final solutions.

A few things seem certain: it will only get faster (“smarter”), and it won’t get tired, take a vacation, or care. Good luck, everybody.